Digital security training is a critical activity for journalists and activists. With online attacks, harassment, surveillance and censorship on the rise, it’s increasingly important both to raise awareness among those affected and to equip them with tools and techniques so they can do their jobs effectively.

Digital security training is led by many different stakeholders who are trusted leaders in their communities and networks. Training can look very different depending on where participants live and work: their context and their exposure to risk greatly influence what type of training they need.

Training is often delivered in partnership with an organization that can provide guidance, training material, and capacity building for trusted leaders. This partnership is a continual learning process, and sponsors and trainers alike need to evaluate the effectiveness of the work. Monitoring and evaluation (M&E) are integral parts of program management. M&E functions to:

- Help trusted community leaders develop professionally in their skills to train diverse participants in ever-changing digital security contexts; and

- Help organizations build stronger programs and secure funding for continued work.

From late 2022 through 2023, Okthanks partnered with Internews’ Journalist Security Fellowship (JSF) to bring refreshed thinking to traditional M&E practices. The case study that follows gives insight into the thinking behind an M&E methodology used for scenario-based digital security trainings conducted under JSF, a program focused on increasing the adoption of digital safety practices among journalists in Central and Southeastern Europe. This case study focuses solely on activities in Albania, Bulgaria, and Croatia. It also provides a summary of the details, with links to the complete reusable resource.

Case Study: Measuring the Effectiveness of the Journalist Security Fellowship (JSF) Trainings

Overview

Sixteen fellows from Albania, Bulgaria, and Croatia were sponsored by Internews to bring digital safety training to journalists in their communities through JSF. The overall objective of the program was to increase the adoption of digital safety practices among journalists in these countries, helping them become safer and more effective in their jobs. The fellows were trained in facilitating scenario-based training methodologies including tabletop scenario exercises (designed by the fellows themselves), a virtual reality (VR) scene simulating digital security challenges during cross-border travel, and a technical simulation using Canary Tokens to imitate malware. The fellows then organized and facilitated scenario-based trainings for other journalists in their communities. With guidance from Internews, fellows were responsible for collecting M&E data on their trainings and integrating learnings to improve upon their trainings.

Because the aim was to increase adoption of improved digital security practices among training participants, the main indicator selected to evaluate the program was the percentage of participants who reported adopting digital safety practices after the training.

An example of the data collected by a fellow and used by the JSF program team to evaluate the effectiveness of training is outlined in the table below. In addition to this data, fellows were encouraged to document other insights observed and generated from the M&E activities in a narrative report. Details on which practices were and were not adopted by participants were also collected and shared.

Evaluation Data

| Indicator: Participants who… | Number |
|---|---|
| Can describe a step they can take to improve their digital safety | 8 (6 female, 2 male) |
| Reported improvement in their digital safety practices | 8 (6 female, 2 male) |
| Rating of training method (1=not useful; 5=very useful) | 4.89 average |

Objectives of the M&E Approach

Several objectives guided the development of the JSF M&E approach for scenario-based trainings. First, however, the design needed to overcome the hurdles presented by traditional M&E methods for data collection. Namely:

- The difficulty in collecting longer-term data and learnings on adopted practices/behaviors

- Survey fatigue and low survey response rates

- Concerns about how usable methodologies are for fellows conducting M&E

Further, with traditional methods, the team saw missed opportunities for M&E to feed into and enhance follow-on activities. Knowing these hurdles and anticipating potential opportunities, the design aimed to strike a balance between the ease of implementing a plan and the ability to gather rich and relevant information. The primary objectives are outlined below, along with a summary of how they were addressed.

Objective 1: Try out new ways to collect long-term data

Following up with participants after a training session can be difficult. Historically, trainers have struggled to get responses to follow-up surveys. Follow-up interviews, another plausible approach, are effective but often require substantial investment to arrange and facilitate. To understand whether digital safety practices are adopted, trainers need to engage with participants again after the training. The approach therefore needed to explore alternative methods.

How it was addressed: The team and fellows considered splitting the training into two in-person sessions. However, this would have been difficult for everyone to implement within the program constraints. Instead, fellows suggested using Signal groups to connect with participants online after the training was over. Inspired by this idea, the M&E approach includes an Online Follow Up as a core component. The Online Follow Up sets the stage for trainers to ask participants questions 3 weeks after the training.

Objective 2: Increase participation

As mentioned above, participation in surveys and interviews after the training is a persistent challenge. The team has acknowledged that post-training surveys in particular tend to be biased, because it’s usually only the people who had a good experience who decide to fill out a follow-up survey.

How it was addressed: In order to increase participation and responses from everyone in the training, M&E activities were integrated as core training components. The approach aimed to create activities that could be implemented within the in-person training time, or as an extension of the training itself via the Online Follow Up. While participation in the Online Follow Up is optional, its purpose was to create a space that offered value to participants, rather than strictly serving M&E needs as typical surveys do.

Objective 3: Motivate participants to engage in the topics beyond the training

Engaging in the topic beyond the training is crucial for the program to be successful in helping journalists adopt new practices. While some light-touch digital safety practices can be easily adopted in the training itself, many require adjustments to how an individual or organization functions over time. Further, for the purpose of M&E, collecting long-term data requires following up with participants at least a few weeks after the training.

How it was addressed: In order to motivate participants to engage again beyond the training, the topic had to become important to them. As part of the M&E approach, a writing exercise is used to help participants discover and define for themselves why digital safety is important for them on a personal level.

Without consequence or priority assigned to a choice, it’s unlikely that any change or uptake of a new practice will happen. The strategy behind the writing exercise was simple: to help participants set digital safety as a priority by helping them realize what it can do for them.

Following this, trainers were encouraged to establish Signal groups or alternative online spaces to connect with participants after the training through the Online Follow Up. These groups help trainers be more effective by giving them space to reinforce the concepts and skills taught, to answer questions, and to provide additional resources. They also serve as a place for participants to connect with each other.

In summary, the development of the M&E approach was guided by three objectives:

- Try out new ways to collect long-term data

- Increase participation

- Motivate participants to engage in the topic beyond the training

With these objectives in mind, the team saw an opportunity to integrate M&E into the training and use it to enhance the overall experience. The next section outlines how M&E was integrated into the training and dives into the activities for data collection.

M&E for JSF

Each JSF training was unique because each fellow defined the learning objectives for their own training. Learning objectives are specific, measurable statements that clearly define what learners will know, understand, or be able to do as a result of a training program. They provide a clear direction for both teaching and assessment. For this set of trainings, fellows selected the scenario-based activities to use along with the topics they would focus on and 3-5 corresponding skills. The learning objectives set the course for the training. Because an M&E goal was to collect long-term data, the follow-up questions were tailored to the learning objectives.

While unique in their learning objectives, JSF trainings shared a typical structure. The M&E activities were integrated as shown in the outline below.

- Introduction: Overview of the agenda, learning objectives, etc.

- Conducting Training Activities: Scenario-based training activities and traditional training on digital security concepts

- Feedback on the scenario-based training method (M&E)

- Writing Exercise (M&E)

- Online Follow Up (M&E)

Let’s dive into the three M&E activities.

1: Feedback on Scenario-Based Training Methods

Short description: A brief survey conducted in the training room, just before or during a break.

Objective: Get insight into how useful the training method was in helping people learn about digital safety.

When it happens: During the training

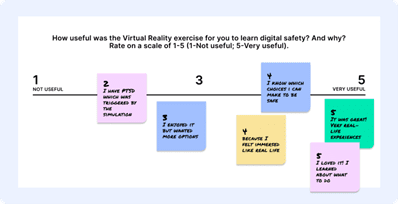

A simple wall survey during the training can yield a lot of insight and is easy to integrate. To get feedback on how well the scenario-based training technique was received, participants take 5 minutes (perhaps just before a coffee break) to rate their experience. The objective of this activity is to learn from participants how useful the scenario-based training method (VR scenario, tabletop scenario exercises, technical simulation) was for them in learning digital safety information. The accompanying visual demonstrates one way to conduct this survey using post-it notes.
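As a rough illustration of how the post-it ratings could be tallied after the session, here is a minimal Python sketch. The function name `summarize_ratings` and the sample ratings are hypothetical and are not taken from the JSF materials; the only thing borrowed from the case study is the 1 (not useful) to 5 (very useful) scale.

```python
from collections import Counter
from statistics import mean


def summarize_ratings(ratings):
    """Summarize wall-survey ratings on the 1 (not useful) to 5 (very useful) scale."""
    if not ratings:
        raise ValueError("no ratings collected")
    if any(r not in range(1, 6) for r in ratings):
        raise ValueError("ratings must be whole numbers from 1 to 5")
    counts = Counter(ratings)
    return {
        "responses": len(ratings),
        "average": round(mean(ratings), 2),
        # Include every score, even those nobody chose, so gaps stay visible.
        "distribution": {score: counts[score] for score in range(1, 6)},
    }


# Made-up ratings read off the post-it notes, for illustration only.
wall_ratings = [5, 5, 4, 5, 3, 5, 4]
rating_summary = summarize_ratings(wall_ratings)
print(rating_summary)
```

Keeping the full distribution alongside the average is a deliberate choice here: a single low rating is easy to miss in an average but may be worth a follow-up conversation.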

2: Writing Exercise

Short description: 10-15 minute exercise where participants are asked to write a reflection in response to 3-4 prompts.

Objective: To personalize the topic. To help people identify why it matters for them.

Note: We also used this exercise as an opportunity to help trainers define actionable steps.

When it happens: During the training

The writing exercise is an activity in which trainers coach participants to find their why: to define for themselves what digital safety can do for them. Trainers use the prompts below to facilitate:

- Write 2-3 sentences describing why digital safety could be important for you.

- Think about what you just experienced and/or learned in this training. Which part of it applies to you?

- How will you use what you learned today in your life?

- In which situations will it be important for you to use something you learned about digital security?

- Next, share: One step you can take after today’s training to improve your digital safety.

Here’s an example response:

There are several tips (practices) I can implement in my habits and in the ways I use my phone and computer that can not only help keep me safe, but also make me more effective and build trust with my contacts. The most relevant thing for me to do is to use Signal when I can to communicate. Then find ways to anonymize the contacts I talk to over text, email and WhatsApp. One step I will take is to…Try to anonymize the contacts I have in my phone that I can’t communicate with via Signal.

3: Online Follow Up

Short description: An extension of the training

Objective: To create a place for trainers to share additional information, to answer questions from participants, and to ask participants follow up questions about adopted practices.

When it happens: Just after the training and 3 weeks after training

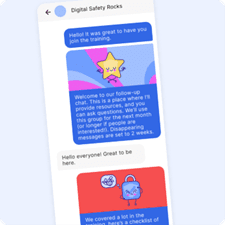

The follow up channel is an online space (for example, a Signal group chat) for trainers to connect with participants, share resources, and answer questions after the training. Beyond providing further assistance, the purpose of this space is to expose participants to the information again and to make it easy for them to take action on the digital safety practices. Lastly, the follow up channel is a launchpad to facilitate online polls in the weeks or months following training, which can help in gathering long-term post training outcome data.

A conversation guide was provided for fellows. It contains sample language for trainers to use to check in with participants, remind them of practices they can adopt, and run polls. The table below shows a small subset of what’s provided in the full guide. The guide also includes a complimentary ‘Digital Safety Rocks’ illustration pack that’s filled with images that can be sent with the messages to make the follow-up experience more engaging.

| Type of Message | Sample | Tips / Instructions |
|---|---|---|
| Check-in: Review of skills | We covered a lot in the training, here’s a checklist of the digital safety skills we covered. Skill 1; Skill 2; Skill 3 | Tailor this message so that your checklist covers the skills focused on in the training. |
| Nudge / Opportunity to act | One easy first step is to … Here’s how: … | Use graphics to spark interest, or screen records to demonstrate how to do the thing. |
| Poll | Since the training, have you adopted the habit of logging into your accounts with 2FA? If yes, react with a thumbs up 👍. If no, react with a thumbs down 👎. If you were already doing this prior to the training, react with a ⭐. | Tailor this message to the skill you’re asking about. If multiple skills are covered, ask about them in separate messages so that it’s easy for people to quickly respond. |
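To show how reactions to a poll message like the sample above could be turned into the adoption indicator, here is a minimal Python sketch. It is not part of the JSF guide: the function name `summarize_poll`, the status labels, and the sample reactions are all hypothetical. It also assumes that participants who were already using the practice (⭐) may be excluded from the denominator, which is one possible reading of the indicator rather than the program's stated method, so both percentages are reported.

```python
from collections import Counter

# Hypothetical mapping from the reaction emoji in the sample poll to a status.
REACTION_STATUS = {
    "👍": "adopted",             # adopted the practice since the training
    "👎": "not_adopted",         # has not adopted the practice
    "⭐": "already_practicing",  # was doing this before the training
}


def summarize_poll(reactions):
    """Tally poll reactions and compute adoption percentages."""
    counts = Counter(REACTION_STATUS[r] for r in reactions if r in REACTION_STATUS)
    respondents = sum(counts.values())
    # Assumption: people already using the practice could not newly adopt it,
    # so they are left out of the second denominator.
    eligible = respondents - counts["already_practicing"]
    adopted = counts["adopted"]
    return {
        "respondents": respondents,
        "adopted": adopted,
        "not_adopted": counts["not_adopted"],
        "already_practicing": counts["already_practicing"],
        "pct_adopted_of_respondents": round(100 * adopted / respondents, 1) if respondents else None,
        "pct_adopted_of_eligible": round(100 * adopted / eligible, 1) if eligible else None,
    }


# Made-up reactions to one poll message, for illustration only.
sample_reactions = ["👍", "👍", "👎", "⭐", "👍", "⭐", "👎", "👍"]
poll_summary = summarize_poll(sample_reactions)
print(poll_summary)
```

Because the guide recommends asking about each skill in a separate message, a tally like this would be run once per poll. Note that the percentages describe only those who reacted, not everyone who attended the training.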

For more details on the recommendations for hosting a follow up channel and poll after a training, refer to the Follow Up Guide (available for download on this page). It was developed for the JSF program, but can easily be adapted for any digital security training.

Conclusion

This JSF M&E approach for scenario-based training was implemented by 16 fellows in 17 sessions for 139 journalists and journalism students in Albania, Bulgaria, and Croatia. It was designed to be easily implemented and integrated as a core programming element. It aims to overcome the existing challenges of traditional methods. It is intended to upskill trainers and help them learn how and where to improve, while simultaneously giving sponsors reporting data and confidence to evaluate and make decisions about the program.

Additional Resources:

- Monitoring and Evaluation for Digital Security Training: This report contains the STAR Framework (page 59), a comprehensive guide for program managers and trainers to formulate a tailored M&E plan. The framework, along with the accompanying research, was the original source of knowledge and inspiration for the JSF M&E approach for scenario-based training.

- Sample M&E guidance materials used under JSF are available for download on this page: